Epsilon-Greedy Algorithm

Part of the MAB series , explains how it balances exploration vs exploitation

In our previous article to understand MAB, I explained MAB. Here I will focus on explaining ε - Greedy (pronounced as Epsilon Greedy). It is one of the variants, we have discussed in the MAB introduction article.

This article is part of the MAB series of articles.

Brief:

Multi-armed bandit (MAB) problems are a class of decision-making tasks where an agent must choose between multiple actions (arms) to maximize a cumulative reward over time.

Epsilon Greedy is one such algorithm that balances exploration and exploitation to select the best arm.

ε-Greedy (Epsilon Greedy)

What is ε-Greedy?

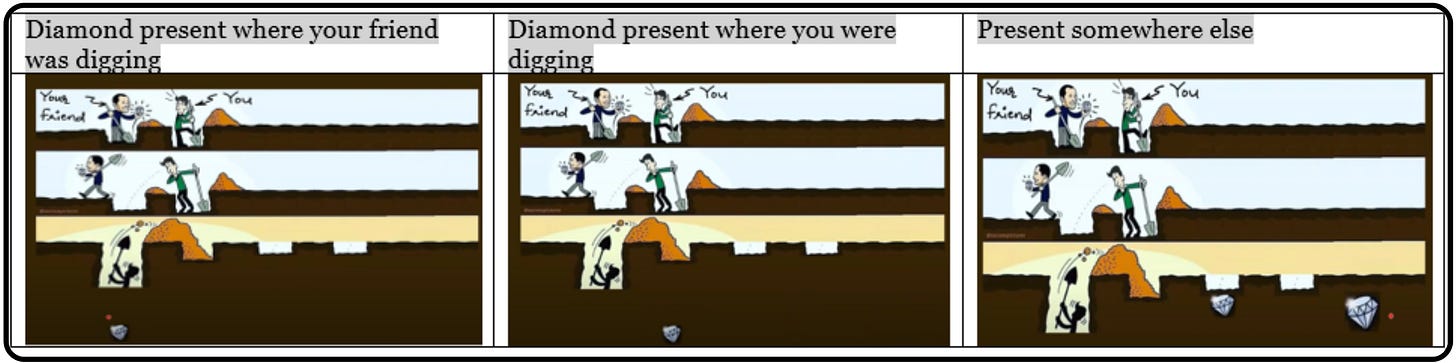

ε-Greedy is a straightforward and effective approach within Multi-Armed Bandits (MAB) that helps us choose the best recommendation algorithm out of several options. It cleverly balances trying out new algorithms (exploration) and sticking with the best-known one (exploitation).

How ε-Greedy Works:

Setting ε (epsilon): We start by choosing a small value for ε (epsilon) generally 10% or 0.10. This value represents how often we want to explore new algorithms versus exploiting the best-known algorithm.

Initial Exploration: In the beginning, we're curious about all the algorithms. So, with probability ε, we randomly select one of the algorithms (exploration). This helps us gather data about their performance.

Exploitation: The rest of the time, with probability (1 - ε), we choose the algorithm that has performed the best so far (exploitation). This means we stick with what we know works well based on the data we've collected.

Learning and Adapting: As we keep using this approach, we learn more about which algorithm is the most effective because we're constantly exploring and exploiting. Over time, ε-Greedy tends to direct us more towards the best-performing algorithm.

Benefits of ε-Greedy in Choosing the Best Algorithm:

Continuous Learning: ε-Greedy ensures we don't lock ourselves into a single algorithm. We keep exploring to find hidden gems while exploiting the current best option.

Adaptive: It adapts to changing user preferences. If a new algorithm proves to be better, ε-Greedy will gradually allocate more users to it.

Simple and Effective: ε-Greedy is easy to understand and implement, making it a practical choice for many scenarios.

By following ε-Greedy, we gradually learn which recommendation algorithm works best based on real user interactions. This approach is valuable when we have multiple options and want to make data-driven decisions to improve user engagement and conversion rates on our website or application.

Simulating the Epsilon Greedy algorithm to select the best algorithm out of all 5 available recommenders:

This code demonstrates a simplified epsilon-greedy multi-armed bandit algorithm that explores and exploits different algorithms (arms) to maximize rewards in a simulated scenario. The focus is on understanding how exploration and exploitation work in the context of MAB algorithms.

import random

# Define the number of arms (algorithms)

num_arms = 5

# Define the true reward probabilities for each algorithm

# In real time we are not aware of these true rewards so we start with

# equal probabilities, something like, 0.5

true_rewards = [0.8, 0.6, 0.7, 0.4, 0.9]

# Initialize variables

epsilon = 0.1 # Exploration parameter (you can adjust this)

num_rounds = 1000

arm_selections = [0] * num_arms

arm_rewards = [0] * num_arms

# Main epsilon-greedy multi-armed bandit algorithm loop

for round in range(1, num_rounds + 1):

if random.random() < epsilon:

# Exploration: Choose a random arm (algorithm)

selected_arm = random.randint(0, num_arms - 1)

else:

# Exploitation: Choose the arm with the highest estimated reward

selected_arm = arm_rewards.index(max(arm_rewards))

# Simulate recommending an article using the selected algorithm

# Here, we assume a click happens with a probability equal to the true reward of the selected arm

reward = random.random() < true_rewards[selected_arm]

# Update statistics for the selected arm

arm_selections[selected_arm] += 1

arm_rewards[selected_arm] += reward

# Print the estimated rewards (click-through rates) for each algorithm

for arm in range(num_arms):

estimated_reward = arm_rewards[arm] / arm_selections[arm] if arm_selections[arm] > 0 else 0

print(f"Algorithm {arm + 1}: Estimated Click-Through Rate = {estimated_reward:.4f}")

# Print the algorithm that was chosen the most

most_selected_arm = arm_selections.index(max(arm_selections))

print(f"Algorithm {most_selected_arm + 1} was selected the most.")

Let us summarize the entire code:

We define the number of arms (algorithms) and their true reward probabilities, which represent the actual success rates of each algorithm.

We initialize some variables, including the exploration parameter

epsilon, the number of roundsnum_rounds, and lists to keep track of arm selections and rewards.We enter the main loop, where we simulate interactions over

num_roundsrounds.In each round, we decide whether to explore or exploit based on

Epsilon. If a random number is less than Epsilon, we explore by selecting a random arm; otherwise, we exploit by choosing the arm with the highest estimated reward.We then simulate recommending an article using the selected algorithm. A reward of 1 is given if the user clicks (based on the true reward probability of the selected arm); otherwise, a reward of 0 is given.

We update statistics for the selected arm, including the number of selections and cumulative rewards.

After all rounds, we calculate the estimated click-through rate (reward rate) for each algorithm and print it.

We also identify which algorithm was selected the most throughout the simulation.

Conclusion:

Epsilon-greedy, with its straightforward approach to balancing exploration and exploitation, is a valuable starting point in Multi-Armed Bandit problems. Yet, it has limitations in terms of slow learning and suboptimal rewards in complex scenarios. Advanced algorithms like UCB1 and Thompson Sampling outperform it by intelligently exploring and exploiting arms. Epsilon-greedy serves as a basic benchmark, but for optimal results, consider more sophisticated alternatives when dealing with intricate MAB challenges.